Johannes Lampel - Projects/SOMVis

The idea of this project was to train a Self-Organizing Map (short German description, Wikipedia (EN), Wikipedia (DE)) on the spectral data of a song, then play a song again, determine the activation of all neurons, display those activations, and see whether an observer can identify any relation between the song and the reaction of the SOM, maybe even for a song the SOM has not been explicitly trained on.

Winamp provides a plugin interface which already delivers the frequency data. I used 144 components of it, so we already have one 144-dimensional vector per frame. Since the plugin runs at 30 Hz and most effects in music last longer than a single frame, I decided to store this data and use a 'sliding window' to give the SOM the data from the last 16 frames.
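The sliding window described above could be sketched roughly as follows. This is only an illustration in Python, not the code from the project; the class name `SlidingWindow` and its `push` method are hypothetical, and the oldest-to-newest ordering of the frames inside the input vector is an assumption.

```python
from collections import deque

N_BINS = 144   # spectrum components used per frame (from the text)
WINDOW = 16    # number of most recent frames given to the SOM (from the text)


class SlidingWindow:
    """Hypothetical helper: keeps the last WINDOW frames as one flat vector."""

    def __init__(self, n_bins=N_BINS, window=WINDOW):
        self.n_bins = n_bins
        self.window = window
        # A bounded deque drops the oldest frame automatically.
        self.frames = deque(maxlen=window)

    def push(self, spectrum):
        """Add one frame; return the SOM input, or None while still filling."""
        if len(spectrum) != self.n_bins:
            raise ValueError("unexpected spectrum length")
        self.frames.append(list(spectrum))
        if len(self.frames) < self.window:
            return None
        # Assumed layout: frames concatenated oldest to newest,
        # giving 16 * 144 = 2304 values per input vector.
        return [value for frame in self.frames for value in frame]
```

Calling `push` once per frame yields `None` for the first 15 frames and a 2304-dimensional vector from the 16th frame on.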

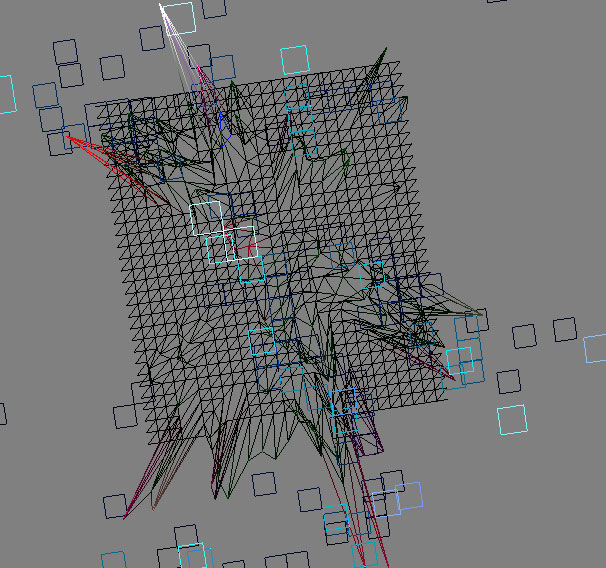

Then a SOM was trained. Its size was 27x30 here, simply because the recall has to run in real time. It isn't a square SOM, because non-square SOMs often give better training results. For a 4 minute song this results in 4*60*30-16 = 7184 patterns, each 144*16*4 bytes in size, since I was using single precision floating point numbers. That is a total of 66MB of training data, which takes a lot of time to train on. Additionally, the SOM itself has a size of 27*30*144*16*4 bytes = 7.5MB, which does not fit into the cache of a CPU these days. You especially notice this during replay, and it is the main reason for the size limitation of the SOM.
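The size estimates above can be reproduced with a few lines of Python. The constants are taken from the text; the variable names are only illustrative, and MB is counted as 10^6 bytes here.

```python
FPS = 30                  # plugin frame rate
SONG_SECONDS = 4 * 60     # a 4 minute song
N_BINS = 144              # spectrum components per frame
WINDOW = 16               # frames per pattern
BYTES_PER_FLOAT = 4       # single precision
SOM_ROWS, SOM_COLS = 27, 30

n_patterns = SONG_SECONDS * FPS - WINDOW            # pattern count as given in the text
pattern_bytes = N_BINS * WINDOW * BYTES_PER_FLOAT   # bytes per pattern / per neuron
training_bytes = n_patterns * pattern_bytes
som_bytes = SOM_ROWS * SOM_COLS * pattern_bytes

print(n_patterns, "patterns of", pattern_bytes, "bytes each")
print(f"{training_bytes / 1e6:.1f} MB of training data")
print(f"{som_bytes / 1e6:.2f} MB of SOM weights")
```

Every neuron stores a weight vector as large as one pattern, so the map size scales directly with the number of neurons.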

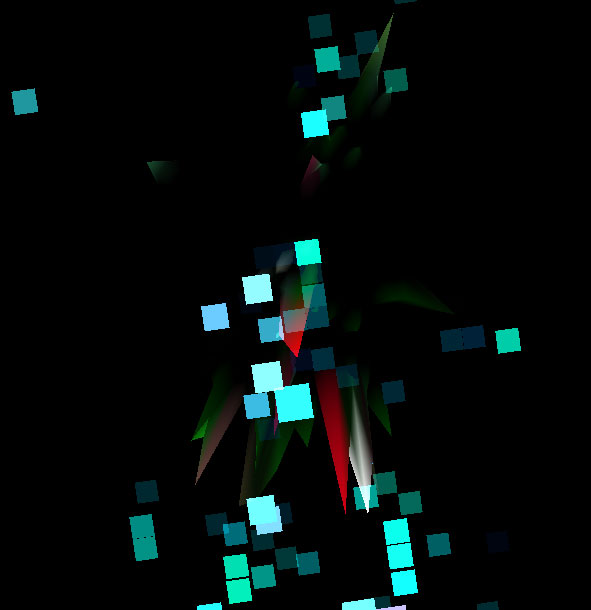

During replay we collect the frequency data as before training and give it to the SOM, which determines the neuron with the smallest distance to the input, the 'winner'. For this neuron a peak is drawn and a quad is pushed up. The peak decays fast, while the quad slowly moves down. This was just to make it look a bit nicer; the same goes for the colors. To fill the screen in a nice way, the same objects are displayed, and optionally scaled according to the current loudness.
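A minimal sketch of one replay step might look like this. It is not the project's code: the names `find_winner` and `Replay` are hypothetical, the squared Euclidean distance is an assumed distance measure, and the exponential decay with the factors 0.7 and 0.97 is only one possible way to get a fast-decaying peak and a slowly sinking quad.

```python
def find_winner(weights, x):
    """Return the index of the neuron whose weight vector is closest to x."""
    best_index, best_dist = -1, float("inf")
    for i, w in enumerate(weights):
        # Squared Euclidean distance (assumption; the text only says 'distance').
        d = sum((wi - xi) ** 2 for wi, xi in zip(w, x))
        if d < best_dist:
            best_index, best_dist = i, d
    return best_index


class Replay:
    """Hypothetical per-frame state: one peak and one quad height per neuron."""

    def __init__(self, weights, peak_decay=0.7, quad_decay=0.97):
        self.weights = weights
        self.peak_decay = peak_decay   # assumed factor: peak fades quickly
        self.quad_decay = quad_decay   # assumed factor: quad sinks slowly
        self.peaks = [0.0] * len(weights)
        self.quads = [0.0] * len(weights)

    def step(self, x):
        """Decay all heights, then raise peak and quad of the current winner."""
        self.peaks = [p * self.peak_decay for p in self.peaks]
        self.quads = [q * self.quad_decay for q in self.quads]
        winner = find_winner(self.weights, x)
        self.peaks[winner] = 1.0
        self.quads[winner] = 1.0
        return winner
```

The full search visits every neuron for every frame, which is where the CPU time during replay goes.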

Result: It is possible to see that similar sounds are located at similar locations, and sometimes it is possible to get nice results with songs the SOM hasn't been trained on, but in most cases those songs have to be from the same 'category'. ...

One current problem is running the application at a fixed framerate, which is sometimes difficult because the categorization by the SOM already takes up a lot of CPU time. With some songs the maxima jump between a lot of different points. I thought about solving this by only allowing the winner to move one neuron away from the last winner (which would also speed up the training) when training on the data straight from the beginning to the end. Alternatively, another component representing some sort of time could be introduced.
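The idea of restricting the winner to the neighborhood of the last winner could be sketched as below. This has not been implemented in the project; the function name `find_winner_near` is hypothetical, and it assumes a row-major neuron layout and a neighborhood that includes the last winner itself plus its diagonal neighbors.

```python
def find_winner_near(weights, x, rows, cols, last_winner=None, radius=1):
    """Search for the winner only within `radius` cells of the last winner.

    Falls back to a search over the whole map when there is no last winner yet.
    Neurons are assumed to be stored row by row: index = row * cols + col.
    """
    if last_winner is None:
        candidates = range(rows * cols)
    else:
        r0, c0 = divmod(last_winner, cols)
        candidates = [
            r * cols + c
            for r in range(max(0, r0 - radius), min(rows, r0 + radius + 1))
            for c in range(max(0, c0 - radius), min(cols, c0 + radius + 1))
        ]

    def squared_distance(i):
        return sum((wi - xi) ** 2 for wi, xi in zip(weights[i], x))

    return min(candidates, key=squared_distance)
```

Under these assumptions at most 9 neurons are compared per frame instead of all 27*30 = 810, at the price that the winner can no longer jump to a distant but better matching neuron.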

Source :

somvis.zip